The Secret Sauce Behind Zero-Shot vs Few-Shot Prompting

We'll explore what these terms mean and when to use each approach.

In discussions about AI prompting, two terms get tossed around a lot: zero-shot and few-shot prompting.

Both are powerful techniques for guiding AI models, but they work quite differently.

Understanding the distinction helps you pick the right approach for your task… saving time, improving accuracy, and making your AI output more reliable.

Let’s break down what zero-shot and few-shot prompting really mean, when to use each, and how to get the best results from both.

What is Zero-Shot Prompting?

Zero-shot prompting is the simplest and most straightforward technique.

You give the AI a direct instruction or question, with no examples or demonstrations.

The model relies only on what it learned during training (pre-training and, for chat models, instruction tuning) to generate a response.

Because there’s no need for labeled data or extra prep work, zero-shot prompting is:

Extremely fast and scalable.

Ideal for general tasks where the AI’s broad knowledge applies.

Useful when you need a quick answer without complex formatting.

Examples include:

Answering trivia or factual questions.

Basic text summarization.

Translating simple sentences.
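A zero-shot prompt is just a direct instruction followed by the input, with no demonstrations. The sketch below assumes a plain-text prompt sent to whatever model you use. The task names and instruction wording are hypothetical, not a fixed API.

```python
# Hypothetical instruction wording for a few of the general tasks listed above.
ZERO_SHOT_INSTRUCTIONS = {
    "trivia": "Answer the following question in one sentence.",
    "summarize": "Summarize the following text in two sentences.",
}


def zero_shot_prompt(task: str, text: str) -> str:
    """Build a zero-shot prompt: a direct instruction plus the input, with no examples."""
    return f"{ZERO_SHOT_INSTRUCTIONS[task]}\n\n{text}"


if __name__ == "__main__":
    print(zero_shot_prompt("trivia", "What is the capital of France?"))
```

Everything the model needs has to fit in that one instruction. That is why zero-shot works best when the task is common enough that the model already knows what a good answer looks like.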

However, zero-shot can sometimes give generic or inconsistent answers, especially when tasks require nuance or very specific output formats.

What is Few-Shot Prompting?

Few-shot prompting sits between zero-shot and full model fine-tuning.

Here, you provide the AI with a handful of input-output examples within your prompt before asking it to perform a new but related task.

This is known as “in-context learning”: the model infers the style, format, or reasoning you want from the examples themselves, without any change to its weights.
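With chat-style models, a common way to supply in-context examples is to alternate user and assistant turns before the real query. The sketch below assumes the widely used role/content message convention; the exact field names and roles your provider expects may differ, and the sentiment task and labels are hypothetical. No model is called here; the function only builds the message list.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def few_shot_messages(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> List[Message]:
    """Turn (input, output) pairs into alternating user/assistant turns,
    followed by the new input the model should answer."""
    messages: List[Message] = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real query goes last, after the demonstrations.
    messages.append({"role": "user", "content": query})
    return messages


if __name__ == "__main__":
    msgs = few_shot_messages(
        "Classify the sentiment of each review as positive or negative.",
        [("The battery lasts all day.", "positive"),
         ("It broke after a week.", "negative")],
        "Setup was quick and painless.",
    )
    for m in msgs:
        print(f"{m['role']}: {m['content']}")
```

Presenting the examples as earlier assistant replies shows the model exactly what kind of answer it is expected to give next.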

Few-shot prompting benefits include:

Improved accuracy and task-specific performance.

Ability to handle specialized or nuanced domains where generic knowledge isn’t enough.

Flexibility to shape output style without retraining or fine-tuning the model.

Few-shot is especially valuable when:

You want outputs in a consistent format (e.g., code snippets, legal summaries).

The task requires specific reasoning patterns or logic.

You have a small number of labeled examples but can’t (or don’t want to) fine-tune the model.

The trade-off? You spend time curating good examples and formatting the prompt correctly.
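Part of that curation can be automated. The checker below is a hypothetical helper, not part of any library: it flags examples whose fields differ from the first one, examples that are very long, and exact duplicates. The limits (`max_examples`, `max_chars`) are arbitrary placeholders to tune for your model’s context window, and whether an example is actually relevant still needs human judgment.

```python
from typing import Dict, List


def check_examples(
    examples: List[Dict[str, str]],
    max_examples: int = 8,
    max_chars: int = 500,
) -> List[str]:
    """Return warnings for few-shot examples that are inconsistent, bloated, or duplicated."""
    if not examples:
        return ["no examples provided"]
    warnings: List[str] = []
    if len(examples) > max_examples:
        warnings.append(f"{len(examples)} examples; consider trimming to {max_examples}")
    expected_fields = sorted(examples[0])
    seen = set()
    for i, example in enumerate(examples):
        # Consistent formatting: every example should use the same fields.
        if sorted(example) != expected_fields:
            warnings.append(f"example {i} has fields {sorted(example)}, expected {expected_fields}")
        # Conciseness: very long examples eat into the context window.
        length = sum(len(value) for value in example.values())
        if length > max_chars:
            warnings.append(f"example {i} is {length} characters long")
        # Duplicates cost tokens without teaching the model anything new.
        fingerprint = tuple(sorted(example.items()))
        if fingerprint in seen:
            warnings.append(f"example {i} duplicates an earlier example")
        seen.add(fingerprint)
    return warnings


if __name__ == "__main__":
    examples = [
        {"input": "5 + 3", "output": "8"},
        {"input": "10 - 7", "output": "3"},
        {"input": "10 - 7", "output": "3"},
        {"question": "2 + 2", "output": "4"},
    ]
    for warning in check_examples(examples):
        print(warning)
```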

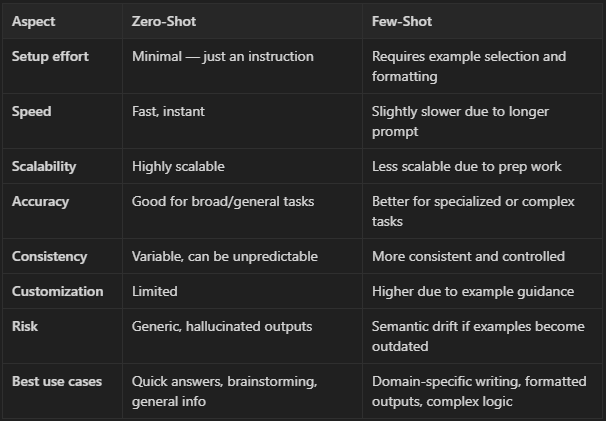

Comparative Analysis: When to Use What?

In practice:

Use zero-shot when you want quick, general-purpose results with zero setup.

Use few-shot when accuracy, consistency, or domain specificity is critical.

How to Craft Effective Few-Shot Prompts

Crafting a strong few-shot prompt isn’t just about tossing in examples.

Consider these best practices:

Choose clear, high-quality examples: Each example should be easy to understand and directly related to your task.

Ensure relevance and diversity: Use examples covering typical and edge cases to help the model generalize better.

Maintain consistent formatting: Present input-output pairs in a uniform way, signaling structure.

Keep examples concise: Too many or overly complex examples can confuse the model or hit token limits.

Order matters: Place the examples before the new input so the model sees the pattern first.

For example:

Example 1: “Input: 5 + 3, Output: 8”

Example 2: “Input: 10 - 7, Output: 3”

Now solve: “Input: 6 × 4, Output:”

This clarity and structure boost the AI’s ability to follow your intent precisely.
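One way to keep examples like these both consistent and correct is to generate every line from a single template and compute each answer instead of typing it by hand. This is a minimal sketch assuming the plain-text `Input: <expression>, Output: <answer>` format shown above. The `OPS` table and function names are illustrative. Because the query uses `×`, the sketch maps that symbol to multiplication.

```python
import operator
from typing import Callable, Dict, List, Tuple

# Symbols used in the prompt, mapped to the Python operation they denote.
OPS: Dict[str, Callable[[int, int], int]] = {
    "+": operator.add,
    "-": operator.sub,
    "×": operator.mul,
}

TEMPLATE = "Input: {a} {op} {b}, Output: {answer}"


def example_line(a: int, op: str, b: int) -> str:
    """Format one worked example with a computed (so always correct) answer."""
    return TEMPLATE.format(a=a, op=op, b=b, answer=OPS[op](a, b))


def arithmetic_prompt(
    examples: List[Tuple[int, str, int]], query: Tuple[int, str, int]
) -> str:
    """Examples first, then the query in the same format with the answer left blank."""
    lines = [example_line(*ex) for ex in examples]
    a, op, b = query
    lines.append(TEMPLATE.format(a=a, op=op, b=b, answer="").rstrip())
    return "\n".join(lines)


if __name__ == "__main__":
    print(arithmetic_prompt([(5, "+", 3), (10, "-", 7)], (6, "×", 4)))
```

Running it prints the three lines from the example above, ending with `Input: 6 × 4, Output:` for the model to complete. Because the answers are computed, a typo can’t slip a wrong result into the demonstrations.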

Challenges and Pitfalls

Despite their power, both approaches have caveats.

Semantic schema drift is a risk specific to few-shot prompting: over time, as contexts or task requirements evolve, your carefully chosen examples may become irrelevant or misleading.

Models can also overfit to examples, generating outputs too similar to them rather than generalizing well.

On the other hand, zero-shot prompting can produce:

Hallucinated or vague responses due to a lack of specific guidance.

Inconsistent tone or format, especially for multi-turn or complex tasks.

Mitigating these risks requires regular review, updating prompts, and combining techniques when appropriate.
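Part of that regular review can be automated. The sketch below re-checks stored few-shot example outputs against the output format the task requires today, so examples written for an older format get flagged. It assumes example outputs are JSON objects; the field names and the schema change are hypothetical.

```python
import json
from typing import List, Set


def stale_examples(example_outputs: List[str], required_fields: Set[str]) -> List[int]:
    """Return indices of example outputs that no longer match the current schema."""
    stale: List[int] = []
    for i, raw in enumerate(example_outputs):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            stale.append(i)  # not valid JSON at all
            continue
        if not isinstance(data, dict) or set(data) != required_fields:
            stale.append(i)  # missing, extra, or renamed fields
    return stale


if __name__ == "__main__":
    # Hypothetical: the schema gained a "priority" field after these examples were written.
    outputs = [
        '{"category": "billing", "priority": "high"}',
        '{"category": "shipping"}',
        "category: refund",
    ]
    print(stale_examples(outputs, {"category", "priority"}))
```

Running a check like this whenever the required format changes catches examples that would otherwise keep teaching the model the old format.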

Practical Tips and Examples

Try these side-by-side examples:

Zero-shot:

“Translate the sentence ‘I love AI’ into Spanish.”

Output: “Yo amo la IA.”

Few-shot:

“Example 1: ‘Hello’ → ‘Hola’

Example 2: ‘Goodbye’ → ‘Adiós’

Now translate: ‘I love AI’.”

The few-shot prompt reinforces the pattern, which can help with less straightforward tasks.
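Building both prompts from the same pieces makes the difference explicit: the few-shot version puts demonstrations in front of the request. This is a minimal sketch. The arrow format mirrors the example above, and `translation_prompt` is a hypothetical helper.

```python
from typing import Sequence, Tuple


def translation_prompt(sentence: str, examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Zero-shot when `examples` is empty; few-shot when it holds (English, Spanish) pairs."""
    if not examples:
        return f"Translate the sentence '{sentence}' into Spanish."
    lines = [f"Example {i}: '{en}' → '{es}'" for i, (en, es) in enumerate(examples, start=1)]
    lines.append(f"Now translate: '{sentence}'.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(translation_prompt("I love AI"))
    print()
    print(translation_prompt("I love AI", [("Hello", "Hola"), ("Goodbye", "Adiós")]))
```

The few-shot version never says “Spanish”: the model has to infer the target language from the pairs. That is the pattern-following the examples are meant to trigger.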

Use cases illustrating the impact:

Customer support: Few-shot prompts improve consistency and tone matching to brand voice.

Creative writing: Zero-shot prompts generate broader ideas, but few-shot prompts steer style and structure.

Data extraction: Few-shot examples help AI accurately pull key info from messy documents.
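For data extraction, the examples usually show the exact structured output you want, which also makes the reply easy to check. This is a sketch under stated assumptions: the invoice fields, sample documents, and the hard-coded `reply` string standing in for a model response are all hypothetical, and no model is called.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical demonstrations: messy text in, JSON with fixed fields out.
EXAMPLES: List[Tuple[str, Dict[str, str]]] = [
    ("Inv #A-102, due 3/4, total $250.00 (ACME Corp)",
     {"invoice_id": "A-102", "customer": "ACME Corp", "total": "250.00"}),
    ("GLOBEX inv.B-7 amt 99.5 usd",
     {"invoice_id": "B-7", "customer": "Globex", "total": "99.50"}),
]


def extraction_prompt(document: str) -> str:
    """Show each document followed by its JSON, then leave the last JSON slot open."""
    parts = ["Extract invoice_id, customer, and total as JSON."]
    for text, fields in EXAMPLES:
        parts.append(f"Document: {text}\nJSON: {json.dumps(fields)}")
    parts.append(f"Document: {document}\nJSON:")
    return "\n\n".join(parts)


def parse_reply(reply: str) -> Dict[str, str]:
    """Parse the reply and check it has the same fields as the examples.
    Raises ValueError (json.JSONDecodeError is a subclass) on bad output."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    expected = set(EXAMPLES[0][1])
    if set(data) != expected:
        raise ValueError(f"unexpected fields: {sorted(data)}")
    return data


if __name__ == "__main__":
    print(extraction_prompt("Initech owes $1,200 on invoice C-33"))
    # Stand-in for a model response; no real model is called here.
    reply = '{"invoice_id": "C-33", "customer": "Initech", "total": "1200.00"}'
    print(parse_reply(reply))
```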

Conclusion

Both zero-shot and few-shot prompting are powerful… but serve different needs.

Zero-shot is your go-to for fast, general tasks with no prep.

Few-shot shines when accuracy, format, and domain expertise matter.

Learning to balance these, experimenting with examples, and continuously refining your prompts will unlock AI’s true potential.

If you want to work smarter with AI, not harder... follow LLMentary, and share this with a friend who’s curious about prompt engineering.

Stay curious.

Share this with anyone you think will benefit from what you’re reading here. The mission of LLMentary is to help individuals reach their full potential. So help us achieve that mission! :)