MCP: The AI Translator that lets LLMs talk to external tools

Find out how LLMs can do more than just 'talk back' to you.

Ever wish your LLM could do more than just talk?

Like, actually fetch your calendar, check real-time data, or analyze a spreadsheet?

The truth is: today’s AIs are great at generating ideas… but terrible at doing things.

They don’t natively “talk” to other apps or software tools.

That’s the real blocker.

And MCP might just be the fix.

Let’s break it down.

🧠 The Problem: Smart AI, But Stuck in a Box

Imagine you ask ChatGPT:

“How many users signed up last week?”

It might guess. It might hallucinate. But it can’t actually pull that number from your company database.

Why?

Because LLMs don’t come with built-in access to your tools, APIs, or files.

To bridge that gap, developers have to write custom wrappers, connectors, and scripts, again and again, for every use case, for every app.

It’s slow. Repetitive. And wildly inefficient.

Enter MCP: the Model Context Protocol.

A new standard that lets AI talk to external tools… using shared, reusable templates.

📡 So, What Is MCP? (In Plain English)

Let’s keep it simple:

MCP is like an API standard… but for AIs.

It’s a communication framework that helps large language models (LLMs) interact with external tools (databases, browsers, spreadsheets, scrapers, even other apps) in a predictable, reusable way.

Think of it like this:

📝 Before MCP

You’re filling out a form. But every office wants a different format. One wants a PDF, one wants a Word doc, one wants it handwritten. Total chaos.

📂 With MCP

There’s a single universal form everyone accepts. It works everywhere.

MCP is that form… the translator that makes it easy for AIs to talk to all kinds of apps, databases, or APIs.

But it’s not just a translator. It’s a standardized channel that allows developers to:

Build once, use many times

Share templates across apps

Plug tools into any LLM-based product without rewriting glue code every time
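To make the “single universal form” idea concrete: under the hood, MCP messages are JSON-RPC 2.0 objects, and asking a server to run a tool uses the same `tools/call` envelope no matter what the tool does. Here is a minimal Python sketch that builds two such requests. The tool names `query_database` and `send_slack_message` and their arguments are hypothetical, chosen only for illustration.

```python
import json

def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",        # every MCP message declares JSON-RPC 2.0
        "id": request_id,        # lets the client match the reply to this request
        "method": "tools/call",  # the same method name for every tool
        "params": {"name": tool_name, "arguments": arguments},
    }

# Two very different (hypothetical) tools, one identical envelope
db_call = make_tool_call(1, "query_database", {"sql": "SELECT COUNT(*) FROM users"})
slack_call = make_tool_call(2, "send_slack_message", {"channel": "#growth", "text": "Hi!"})

print(json.dumps(db_call, indent=2))
print(sorted(db_call) == sorted(slack_call))  # True: same top-level keys
```

Only the tool name and arguments change from one tool to the next; everything else about the request stays fixed, which is what makes the connectors reusable.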

MCP was conceived and built by two software engineers at Anthropic, a leading AI company and the creator of Claude, one of today’s best LLMs.

Thanks to its growing adoption (even OpenAI is now on board), it’s quickly becoming the go-to protocol for connecting AI to real-world action.

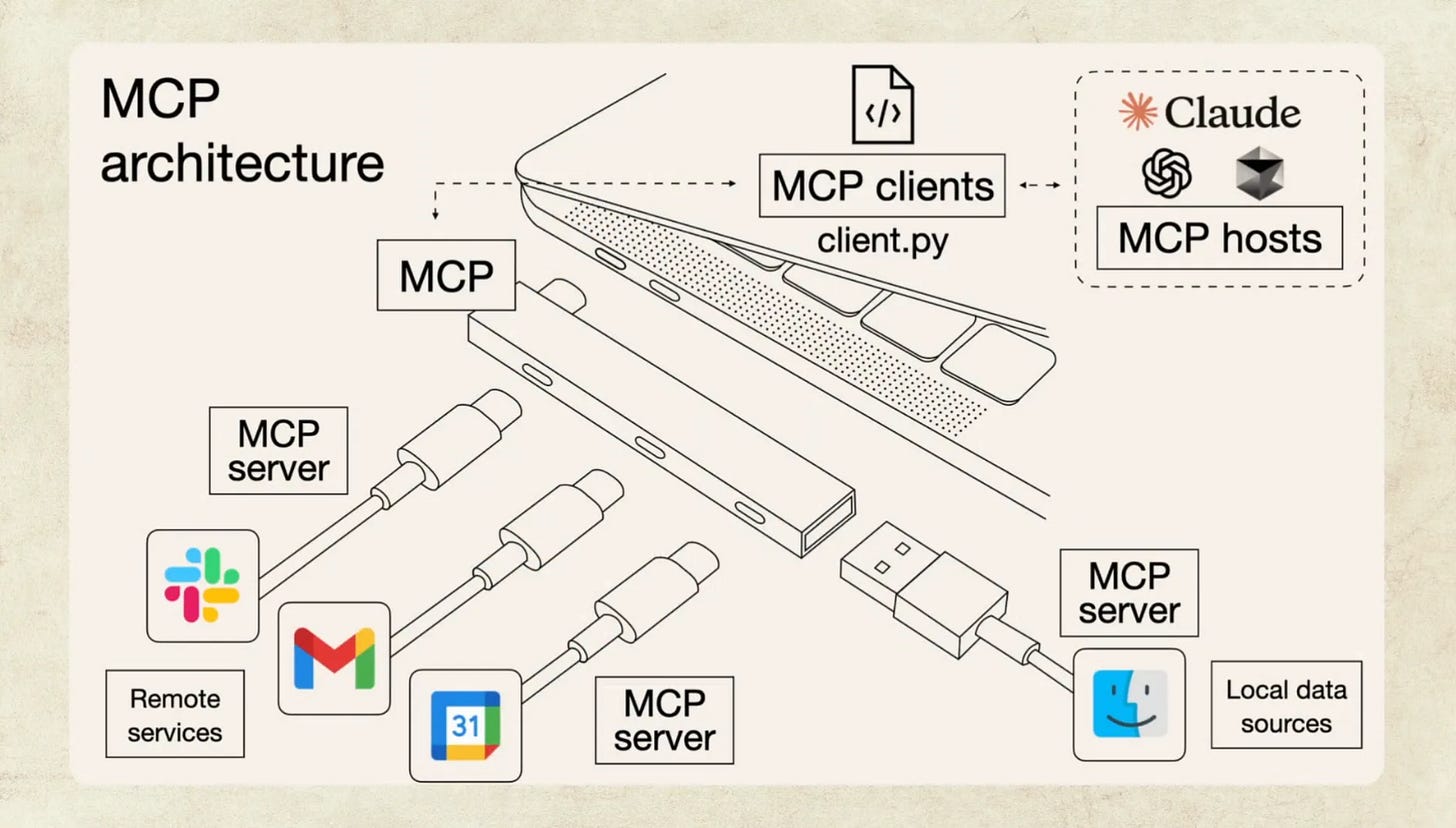

🎭 Meet the Cast: Host, Client, and Server

MCP has three main roles. Think of them like parts of a delivery system.

Here’s who does what:

1️⃣ The MCP Host

This is your AI interface: the application that users interact with. It’s where the prompt is typed, and where the output appears.

🧠 Examples of Hosts:

ChatGPT

Claude

An AI-powered customer support bot

A custom AI dashboard inside your CRM

An agent framework like LangChain or AutoGen

This is the “face” of the AI. But it doesn’t know how to talk to tools by itself.

2️⃣ The MCP Client

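This is the behind-the-scenes messenger. It lives inside the host, keeps a dedicated connection to one server, and turns the tool calls the host’s model decides on into standardized MCP messages (JSON-RPC 2.0) that it sends to that server.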

📦 Examples of Clients:

A Python script that uses the official MCP SDK to connect to a database server

A middleware layer that reads a scraper server’s tool description to learn what input it needs

A Python function that builds a “tools/call” request payload

A TypeScript SDK call that invokes a tool on a browser-automation server

A connection library built on the MCP standard

Think of it as your AI’s personal assistant… fluent in both AI language and tool language.
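Here is a rough sketch of the client’s bookkeeping, assuming the stdio transport, where each JSON-RPC message travels as one line of JSON. `ToyMcpClient` and the `count_signups` tool are hypothetical names for illustration; the official SDKs handle all of this for you, through their own APIs.

```python
import itertools
import json

class ToyMcpClient:
    """Hypothetical sketch of an MCP client's bookkeeping, not the official SDK."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)     # a fresh id for each request
        self.pending: dict[int, str] = {}  # request id -> method still awaiting a reply

    def encode_request(self, method: str, params: dict) -> str:
        request_id = next(self._ids)
        self.pending[request_id] = method
        message = {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
        # stdio transport: one JSON object per line, with no embedded newlines
        return json.dumps(message) + "\n"

    def handle_line(self, line: str) -> tuple[str, dict]:
        """Parse one line from the server and pair it with the request it answers."""
        response = json.loads(line)
        method = self.pending.pop(response["id"])
        if "error" in response:
            raise RuntimeError(f"{method} failed: {response['error']['message']}")
        return method, response["result"]

client = ToyMcpClient()
wire = client.encode_request("tools/call", {"name": "count_signups", "arguments": {}})
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "112"}]}}'
method, result = client.handle_line(reply)
print(method, result["content"][0]["text"])  # tools/call 112
```

The request id is what lets the client pair each reply with the request it answers, even when several requests are in flight at once.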

3️⃣ The MCP Server

This is where the actual action happens. The server talks to the tool, fetches the data, performs the task, and sends the result back up the chain.

🔌 Examples of Servers:

A database endpoint that runs queries

A Selenium bot that scrapes a web page

A function that triggers a Notion update

A machine learning model that does image analysis

A tool that sends Slack messages or emails

The server doesn’t need to know anything about the AI. It just does its job… and sends the results back.
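And the other end of the wire, as a toy sketch: a server answers tools/list by describing its tools (name, description, and a JSON Schema for the inputs) and answers tools/call by running one and wrapping the output in a content list. The `add` tool and the `handle_request` function are hypothetical; a real server would usually be built with an official SDK rather than by hand.

```python
# Hypothetical tool registry: name -> (description, JSON Schema for inputs, handler)
TOOLS = {
    "add": (
        "Add two numbers",
        {"type": "object",
         "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
         "required": ["a", "b"]},
        lambda args: args["a"] + args["b"],
    ),
}

def handle_request(request: dict) -> dict:
    """Answer one JSON-RPC request the way an MCP server would (toy version)."""
    method = request["method"]
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (desc, schema, _) in TOOLS.items()
        ]}
    elif method == "tools/call":
        name = request["params"]["name"]
        if name not in TOOLS:
            # Protocol-level problem: reported as a JSON-RPC error
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
        _, _, handler = TOOLS[name]
        try:
            value = handler(request["params"].get("arguments", {}))
            result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
        except Exception as exc:
            # Tool failure: reported inside the result so the model can read it
            result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

reply = handle_request({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
                        "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(reply["result"]["content"][0]["text"])  # 5
```

Notice that nothing in the server mentions a model. Tool failures come back as a normal result with isError set, so the model can read the message and adjust, while protocol problems such as an unknown tool come back as JSON-RPC errors.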

Together, these three parts (Host, Client, Server) create a smooth pipeline from “ask” to “action.”

🔄 Let’s Walk Through It: One Request, All the Way Through

Say you type into an AI assistant:

“Show me how many new users signed up last week and email me a summary.”

Here’s what happens behind the scenes (with MCP):

The Host (say, the Claude app) hands your request to the model, which recognizes that it needs external help

The host’s MCP clients jump in (one per connected server) and send two requests:

One to a database server for the signup numbers

Another to a messaging server to draft and send the email

The Servers take over:

The database server runs a query such as: “SELECT COUNT(*) FROM users WHERE signup_date >= CURRENT_DATE - INTERVAL '7 days'” (PostgreSQL syntax, counting the last seven days)

The email server formats the result into a short message and sends it

The results are returned to the Host, which then replies with:

“112 users signed up last week. A summary has been emailed to you.”
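The walkthrough above can be simulated end to end in a few lines of Python. This is a simplification under loud assumptions: an in-memory SQLite table stands in for the company database, the sample rows and dates are made up, the email tool only records messages in a list instead of sending them, and the host’s decisions (which the model would make in a real system) are hard-coded. The tool names `count_signups` and `send_email` are hypothetical.

```python
import sqlite3

# --- Database "server": a hypothetical tool backed by an in-memory SQLite table ---
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, signup_date TEXT)")
db.executemany(
    "INSERT INTO users (signup_date) VALUES (?)",
    [("2024-06-01",), ("2024-06-10",), ("2024-06-12",), ("2024-06-14",)],  # made-up rows
)

def count_signups(since: str) -> int:
    """Tool: count users who signed up on or after `since` (YYYY-MM-DD)."""
    (count,) = db.execute(
        "SELECT COUNT(*) FROM users WHERE signup_date >= ?", (since,)
    ).fetchone()
    return count

# --- Email "server": a hypothetical tool that records messages instead of sending them ---
outbox: list[dict] = []

def send_email(to: str, body: str) -> str:
    outbox.append({"to": to, "body": body})
    return "sent"

SERVERS = {"database": {"count_signups": count_signups},
           "email": {"send_email": send_email}}

def call_tool(server: str, tool: str, **arguments):
    """Stand-in for an MCP client: route one tool call to one server."""
    return SERVERS[server][tool](**arguments)

# --- Host: a real host's model would choose these calls; here they are hard-coded ---
signups = call_tool("database", "count_signups", since="2024-06-08")
call_tool("email", "send_email", to="me@example.com",
          body=f"{signups} users signed up last week.")
print(f"{signups} users signed up last week. A summary has been emailed to you.")
```

The query uses a “?” placeholder rather than pasting the date into the SQL string, which is the safer habit for any tool whose arguments come from a model.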

It looks simple on the surface.

But under the hood, a whole network of components just cooperated to get the job done.

And here’s the kicker:

Each of those components (client logic, server actions) could be reused in hundreds of different AI apps.

That’s the power of MCP.

💥 Why This Changes the Game for AI Developers

Let’s talk real impact:

🔁 Reusability

No more writing custom wrappers for every new tool. With MCP, developers can plug in pre-built connectors and just go.

🌐 Community Templates

Want to integrate with a calendar app, CRM, or SQL database? Someone may have already built that MCP server. You just connect.
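“You just connect” works because every MCP server describes its own tools in its tools/list response, including a JSON Schema for each tool’s inputs. So one generic piece of client code can check a call against any server without a custom wrapper. A minimal sketch, with a made-up listing from a hypothetical calendar server; only the required-fields check is shown, since full JSON Schema validation would need a dedicated library.

```python
def find_tool(tools_list_result: dict, name: str) -> dict:
    """Look up one tool's description in a server's tools/list result."""
    for tool in tools_list_result["tools"]:
        if tool["name"] == name:
            return tool
    raise KeyError(f"server does not offer a tool named {name!r}")

def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required inputs (per the tool's JSON Schema) that the call lacks."""
    required = tool.get("inputSchema", {}).get("required", [])
    return [name for name in required if name not in arguments]

# A tools/list result as a hypothetical community calendar server might return it
listing = {"tools": [{
    "name": "create_event",
    "description": "Create a calendar event",
    "inputSchema": {"type": "object",
                    "properties": {"title": {"type": "string"}, "start": {"type": "string"}},
                    "required": ["title", "start"]},
}]}

tool = find_tool(listing, "create_event")
print(missing_arguments(tool, {"title": "Standup"}))  # ['start']
```

Nothing in this code knows about calendars; point it at a CRM or SQL server’s listing and it works unchanged.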

⚙️ Multi-Language SDKs

Anthropic released SDKs for Python, Java, TypeScript, and more, so developers across stacks can easily start building MCP-compatible systems.

⚡ Speed

Adding a new capability (like data lookup or web scraping) could take hours, not days or weeks. And testing is faster too.

In short:

It standardizes the hardest part of AI integration.

So devs can focus on creativity, not plumbing.

Today, there are entire directories and marketplaces of MCP servers, where developers can pick ready-made servers for tools like Notion, Reddit and even LinkedIn! This makes it easy to connect LLMs to external products and bring the best use cases into existence!

🚧 What’s the Catch? (For Now)

MCP isn’t perfect. Yet.

Like any new protocol, it comes with growing pains:

🐞 Bugs and edge cases: Not all tools behave the same way. Expect hiccups.

🛠️ Limited coverage: Many tools still need dedicated MCP wrappers built.

🧪 Evolving spec: MCP is young (Anthropic released it in late 2024), and both the spec and the ecosystem are still developing.
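One practical consequence of an evolving spec: MCP versions are dated strings, and client and server agree on one during the initialize handshake. Per the spec, the client proposes a version it supports (ideally its latest); the server echoes it if it supports it too, and otherwise offers another version it supports (ideally its own latest); the client should disconnect if it can’t speak the offered version. A small sketch of that logic; the function names are hypothetical, and the version list is illustrative rather than complete.

```python
from typing import Optional

# Illustrative dated spec revisions, newest first (not an exhaustive list)
CLIENT_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"]

def server_pick_version(requested: str, supported: list[str]) -> str:
    """Server side: echo the requested version if supported, else offer our latest."""
    # YYYY-MM-DD strings sort correctly as plain text, so max() finds the newest
    return requested if requested in supported else max(supported)

def negotiate(client_versions: list[str], server_versions: list[str]) -> Optional[str]:
    """Return the agreed version, or None if the client should disconnect."""
    requested = client_versions[0]  # the client proposes its newest version
    offered = server_pick_version(requested, server_versions)
    return offered if offered in client_versions else None

print(negotiate(CLIENT_VERSIONS, ["2025-03-26", "2024-11-05"]))  # 2025-03-26
print(negotiate(["2024-11-05"], ["2025-06-18"]))                 # None
```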

But that’s also what makes this exciting.

OpenAI’s recent support for MCP in its Agents SDK is a big signal.

When major players adopt a protocol, standards start to solidify.

And once the ecosystem reaches critical mass… we’ll see a Cambrian explosion of agentic AI products that just work.

🔚 Final Thought: Connecting the Dots, Literally

Prompting was the first big unlock in AI.

But real-world usefulness? That needs action.

And action needs connection.

That’s why MCP matters… it’s not just another dev tool.

It’s the bridge that lets smart models plug into the real world.

So the next time you hear about an “AI agent that books your flights” or “ChatGPT that runs spreadsheets”…

Remember: there’s a good chance MCP is doing the heavy lifting.

Next on LLMentary:

Now that you have a basic understanding of MCP, you can think of it as discovering that your LLM has ‘hands’ to ‘take actions’, not just a ‘mouth’ to ‘talk’ to you. In my next post, we’ll explore how these ‘hands’ give LLMs their new-found superpower of actually executing actions. Systems that do this are called AI agents. Sound familiar? :)

Until then, stay curious.

Share this with anyone you think will benefit from what you’re reading here. The mission of LLMentary is to help individuals reach their full potential. So help us achieve that mission! :)