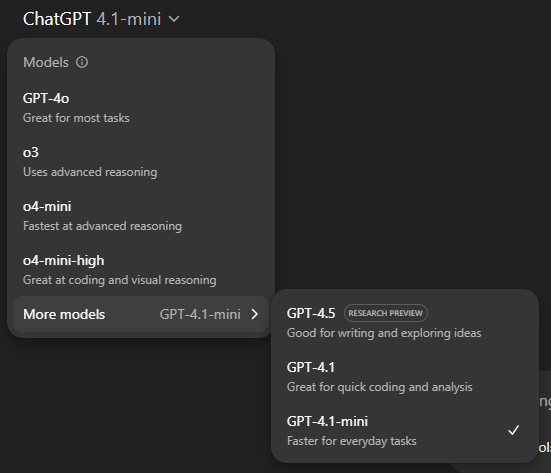

Which of the latest ChatGPT models should you use? A quick, practical comparison

Choosing the right ChatGPT model isn’t just a tech detail.

It shapes everything from how quickly you get answers to the quality of those answers, and even the cost of your AI use.

With multiple versions floating around (GPT-3.5, GPT-4, GPT-4 Turbo, GPT-4o, GPT-4 with browsing), it’s easy to get confused.

This post breaks down the essentials you need to know, so you can pick the perfect model for your needs, without overpaying or sacrificing quality.

Why Choosing the Right Model Matters

Not all ChatGPT models are created equal. Each has unique strengths, weaknesses, and price points. Picking the wrong one can slow you down, cost more, or leave you frustrated with poor results.

By understanding what each model does best, you’ll save time and money and get the answers you actually need.

Meet the Models: Clear, Simple Descriptions

Here’s a quick intro to the main ChatGPT models:

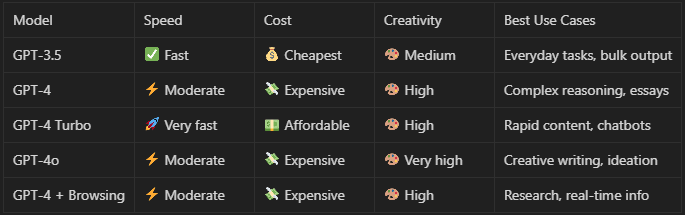

GPT-3.5: The reliable workhorse. Fast, affordable, perfect for everyday tasks like emails, simple writing, and basic Q&A.

GPT-4: The smart generalist. More thoughtful, better at complex questions, logical reasoning, and nuanced conversations.

GPT-4 Turbo: Like GPT-4’s speedy sibling. Faster and cheaper, great for bulk tasks or rapid back-and-forth.

GPT-4o: The creative specialist. Ideal when you want deep insights, storytelling, or imaginative content.

GPT-4 with Browsing: The researcher. Can look up the latest info online for up-to-date answers.

Cheat Sheet: Model Comparison at a Glance

Use Cases: Matching Models to Tasks

Routine writing & quick summaries: GPT-3.5 is perfect for drafting customer support emails, summarizing meeting minutes, or creating product descriptions for e-commerce listings quickly and affordably.

Deep reasoning & detailed content: GPT-4 excels at writing detailed market analysis reports, drafting complex legal documents, or solving multi-step math problems that require careful logic.

High volume, fast turnaround: GPT-4 Turbo works well when generating hundreds of personalized marketing emails, producing daily social media post ideas, or powering live chatbots that need quick responses at scale.

Creative projects & brainstorming: GPT-4o is ideal for crafting imaginative short stories, brainstorming unique campaign concepts for brands, or generating poetic language for artistic projects.

Research or news updates: GPT-4 with browsing shines when summarizing the latest scientific studies, tracking breaking news events, or pulling up-to-date regulatory information for compliance teams.
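If you call these models from code rather than the ChatGPT app, the mapping above can be turned into a small routing table. The Python below is a minimal sketch: the task labels (`routine`, `creative`, and so on) are hypothetical, and the model identifiers are assumptions based on OpenAI's API naming, so check the current model list before relying on them. Browsing is not a separate model ID, so the research route just flags that a web-search step is needed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str               # assumed API model identifier
    needs_web: bool = False  # True when the task needs live web data


# Hypothetical task categories mapped to the models suggested above.
ROUTES = {
    "routine": Route("gpt-3.5-turbo"),
    "deep_reasoning": Route("gpt-4"),
    "high_volume": Route("gpt-4-turbo"),
    "creative": Route("gpt-4o"),
    "research": Route("gpt-4", needs_web=True),
}


def pick_model(task_type: str) -> Route:
    """Return the route for a task type, falling back to the cheapest model."""
    return ROUTES.get(task_type, ROUTES["routine"])


if __name__ == "__main__":
    for task in ("creative", "research", "unknown"):
        route = pick_model(task)
        suffix = " + web search" if route.needs_web else ""
        print(f"{task} -> {route.model}{suffix}")
```

Falling back to the cheapest model for unknown task types is a deliberate choice: it keeps costs low by default, and you upgrade only when a task is explicitly tagged as needing more.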

Cost vs. Value: When to Invest More

Choosing a ChatGPT model isn’t just about picking the “best” one; it’s about balancing cost against performance for your specific needs.

GPT-3.5 is the cheapest option and great for high-volume, straightforward tasks like drafting quick emails, summarizing simple texts, or handling routine customer questions. Its lower cost makes it perfect for startups or hobbyists with tight budgets. But it can struggle with nuance or complex reasoning.

GPT-4 and GPT-4o deliver better reasoning, creativity, and understanding. They excel in writing detailed reports, brainstorming complex ideas, coding, and handling multi-step instructions. If quality matters (for example, in client proposals, technical documents, or creative content), these models justify the extra expense.

GPT-4 Turbo offers a sweet spot: it’s faster and less costly than standard GPT-4 but maintains a similar quality level. This makes it ideal for scaling content production or rapid prototyping, where you want both speed and strong outputs.

Practical tip: Start with GPT-3.5 for your initial drafts or simple tasks. For final outputs or tasks requiring deeper insight, upgrade to GPT-4 or GPT-4o. This way, you optimize both cost and quality.
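To see why this tip saves money, here is a back-of-the-envelope comparison in Python. The prices are placeholders I made up for illustration, not OpenAI’s actual rates; only the idea (many cheap drafts, few premium final passes) comes from the tip above.

```python
from __future__ import annotations

# Placeholder prices per 1,000 tokens. These are NOT real OpenAI prices;
# look up current figures on the official pricing page before using this.
PRICE_PER_1K = {
    "cheap": 0.001,    # stands in for a GPT-3.5-class model
    "premium": 0.010,  # stands in for a GPT-4 / GPT-4o-class model
}


def cost(tokens: int, tier: str) -> float:
    """Cost of processing `tokens` tokens at the given price tier."""
    return tokens / 1000 * PRICE_PER_1K[tier]


def compare(drafts: int, finals: int, tokens_each: int) -> tuple[float, float]:
    """Return (all_premium, tiered) cost for `drafts` drafts plus `finals` final passes.

    all_premium: drafts and final passes all run on the premium tier.
    tiered: drafts run on the cheap tier; only final passes run on premium.
    """
    draft_tokens = drafts * tokens_each
    final_tokens = finals * tokens_each
    all_premium = cost(draft_tokens + final_tokens, "premium")
    tiered = cost(draft_tokens, "cheap") + cost(final_tokens, "premium")
    return all_premium, tiered


all_premium, tiered = compare(drafts=50, finals=5, tokens_each=1_000)
print(f"all premium: {all_premium:.2f}  tiered: {tiered:.2f}")
```

With these placeholder numbers the tiered approach costs 0.10 units versus 0.55 for doing everything on the premium tier. The real ratio depends on current prices and on how many drafts you throw away, so treat this as a way to reason about your own numbers rather than a quote.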

Prompting Tips for Each Model

How you ask matters, and different models respond best to different prompt styles.

GPT-3.5: This model performs best with clear, concise prompts. It prefers straightforward questions or commands without too much complexity or context. For example, instead of “Explain quantum physics to me and its implications for AI,” try “What is quantum physics in simple terms?” Keep instructions simple and direct.

GPT-4 and GPT-4o: These models love detailed, multi-part prompts. They excel when you provide context, set constraints, or ask for step-by-step reasoning. For instance, a prompt like “Explain the basics of blockchain, then discuss its impact on finance and possible risks” will yield a more comprehensive answer.

GPT-4 Turbo: Since it’s designed for speed and cost efficiency, you can experiment more freely here. Give it longer prompts or ask for multiple variations to see what sticks. Because each attempt is quicker and cheaper, playful, iterative prompting won’t slow your workflow.

Browsing-enabled GPT-4: When you want up-to-date info, be explicit about it. For example: “Using current web data, summarize recent developments in renewable energy policies.” Also, ask for sources to verify information.

Quick hack: When switching models, adjust your prompts accordingly instead of using a one-size-fits-all approach. Tailoring prompts boosts clarity and output relevance.
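If you send prompts from a script, the same advice can be captured in one helper that shapes a request for whichever model will receive it. This is a minimal sketch: the model identifiers are assumed API names, the `web` flag is a hypothetical stand-in for a browsing-enabled setup, and the wording of each template simply follows the tips above.

```python
def build_prompt(model: str, question: str, context: str = "", web: bool = False) -> str:
    """Shape one request differently depending on which (assumed) model receives it."""
    if model == "gpt-3.5-turbo":
        # Short and direct: a single, simple instruction.
        return question
    if web:
        # Browsing-enabled use: ask explicitly for current data and for sources.
        return f"Using current web data, {question} Cite your sources."
    # GPT-4-class models: add context, the task, and a request for stepwise reasoning.
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {question}")
    parts.append("Work through it step by step, then give a short summary.")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt("gpt-3.5-turbo", "What is quantum physics in simple terms?"))
    print(build_prompt("gpt-4o", "Explain the basics of blockchain, then discuss its "
                       "impact on finance and possible risks.", context="Audience: a finance team"))
    print(build_prompt("gpt-4", "summarize recent developments in renewable energy policies.", web=True))
```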

Advanced Strategies: Combining Models for Efficiency

If you want to be smart with your time and budget, use a multi-model workflow, mixing models to get the best balance of cost, speed, and quality.

Example workflow:

Step 1: Drafting

Use GPT-3.5 to generate rough drafts, outlines, or multiple ideas quickly. It’s cost-effective and fast, perfect for producing large volumes.

Step 2: Refining

Pass your drafts to GPT-4o for polishing, creative enhancement, and nuanced rewriting. It adds depth and style.

Step 3: Scaling

When you need to produce many similar outputs or engage in live chat, use GPT-4 Turbo to handle volume without sacrificing quality.

Step 4: Research and Fact-Checking

Use GPT-4 with browsing to verify facts, pull in real-time data, or generate content based on the latest information.

This approach suits content marketing, product management, and customer support, where quality and efficiency both matter.

Pro tip: Automate these handoffs if you’re building an AI-powered app or workflow. Many platforms let you connect different models programmatically.
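As a rough illustration of such a handoff, here is a Python sketch of Steps 1 and 2 (cheap draft, stronger polish). The `openai_caller` function uses the official `openai` Python SDK’s Chat Completions call; the model IDs are assumptions that may change over time. The pipeline itself takes the caller as a parameter, so you can test it offline with a stand-in. Steps 3 and 4 are left out: scaling is mostly a matter of running the same function in a loop, and web fact-checking needs a search tool that this sketch doesn’t assume.

```python
from typing import Callable

# A model caller takes (model_id, prompt) and returns the model's text reply.
ModelCaller = Callable[[str, str], str]


def openai_caller(model: str, prompt: str) -> str:
    """Call the OpenAI Chat Completions API (needs `pip install openai` and OPENAI_API_KEY)."""
    from openai import OpenAI  # imported lazily so the sketch runs without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content or ""


def draft_then_refine(topic: str, call: ModelCaller,
                      draft_model: str = "gpt-3.5-turbo",
                      refine_model: str = "gpt-4o") -> str:
    """Step 1: a cheap model drafts. Step 2: a stronger model polishes the draft."""
    draft = call(draft_model, f"Write a rough first draft about: {topic}")
    return call(refine_model,
                "Polish the following draft: improve clarity, depth, and style.\n\n" + draft)


if __name__ == "__main__":
    # Offline stand-in so the example runs without an API key.
    def fake_call(model: str, prompt: str) -> str:
        return f"[{model}] {prompt[:40]}"

    print(draft_then_refine("remote work tips", fake_call))
    # With a key configured: draft_then_refine("remote work tips", openai_caller)
```

Passing the caller in, rather than hard-coding the API, keeps the workflow logic separate from any one provider, which also makes it easy to swap models as new ones appear.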

Common Mistakes and How to Avoid Them

Mistake 1: Always using the most expensive model

People often default to GPT-4o or GPT-4 with browsing for everything, assuming “newer is better.” That can balloon costs unnecessarily. Match the model to the task’s complexity instead.

Mistake 2: Treating all prompts the same

Sending simple prompts to GPT-4o or overly complex ones to GPT-3.5 reduces effectiveness. Adjust your prompt style per model for best results.

Mistake 3: Ignoring model limitations

Even GPT-4 variants hallucinate sometimes or miss context in very long tasks. Over-relying on a single model without human review can cause errors.

Mistake 4: Forgetting to leverage browsing or file uploads

Many users don’t enable these features and miss out on real-time data or document analysis, limiting their AI’s usefulness.

Mistake 5: Not monitoring usage costs

Without tracking, high-volume use of expensive models can lead to unexpected bills. Set budgets and alerts if you’re using paid plans.
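If you use the API, a simple running tally goes a long way. The sketch below accumulates spend per model and reports when you cross an alert threshold. The budget, the 80% alert ratio, and the prices you pass in are all your own placeholders; in practice the token counts would come from each API response’s `usage` field (for example `response.usage.total_tokens` in the `openai` Python SDK).

```python
from __future__ import annotations


class BudgetTracker:
    """Accumulate spend per model and report when spend crosses a threshold."""

    def __init__(self, monthly_budget: float, alert_ratio: float = 0.8):
        self.monthly_budget = monthly_budget
        self.alert_ratio = alert_ratio  # warn at 80% of the budget by default
        self.spend_by_model: dict[str, float] = {}

    def record(self, model: str, tokens: int, price_per_1k: float) -> None:
        """Add the cost of one call; price_per_1k is a figure you supply."""
        call_cost = tokens / 1000 * price_per_1k
        self.spend_by_model[model] = self.spend_by_model.get(model, 0.0) + call_cost

    @property
    def total(self) -> float:
        return sum(self.spend_by_model.values())

    def status(self) -> str:
        """Return "ok", "alert", or "over budget"."""
        if self.total >= self.monthly_budget:
            return "over budget"
        if self.total >= self.alert_ratio * self.monthly_budget:
            return "alert"
        return "ok"


if __name__ == "__main__":
    tracker = BudgetTracker(monthly_budget=1.0)          # placeholder budget
    tracker.record("gpt-4o", tokens=50_000, price_per_1k=0.01)  # placeholder price
    print(tracker.status(), round(tracker.total, 2))
    tracker.record("gpt-4o", tokens=40_000, price_per_1k=0.01)
    print(tracker.status(), round(tracker.total, 2))
```

Tracking spend per model, not just in total, shows you which model is driving the bill, which is exactly the information you need to decide where a cheaper model would do.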

How to avoid: Educate yourself on each model’s sweet spots, experiment with smaller tasks, and build workflows that blend models efficiently.

Final Thought: Know Your AI Tools, Work Smarter

Understanding ChatGPT’s models lets you get exactly what you need — faster, cheaper, and better.

Try experimenting with different models next time you use ChatGPT. Notice how your results change.

Want help crafting prompts or workflows tailored to each model? Let me know!

Which model do you find yourself using most… and why? Drop a comment!

Next up on LLMentary:

Now that you’ve had a chance to explore what ChatGPT offers, let’s take another step back and look at the wider set of LLMs available today beyond ChatGPT, such as Claude, Grok, and Llama. We’ll look at which models exist, what each is best used for, and, most importantly, how to evaluate different models!

It’s a vast ocean of models and use cases, and I’m excited to explore them with you!

Until then, stay curious.

Share this with anyone you think will benefit from what you’re reading here. The mission of LLMentary is to help individuals reach their full potential. So help us achieve that mission! :)