Beyond Prompt Engineering: Next steps for a smarter LLM

Prompting isn't the endgame: learn what it means to 'fine-tune' your LLM!

You’ve seen how Prompt Engineering can supercharge the output of LLMs to make them more relevant, meaningful, and even seem smarter than your average Joe!

Although prompt engineering has made AI accessible, prompts alone can’t always give you results that are reliable, accurate, or up to date!

You’ve probably noticed this already: ChatGPT sometimes gives outdated information, generic answers to specialized questions, or confidently incorrect responses.

It’s not that your prompts are necessarily bad.

It’s that prompting alone has limits.

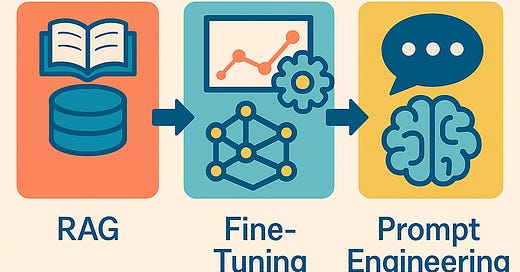

Today, let’s talk about two powerful upgrades, fine-tuning and Retrieval-Augmented Generation (RAG), that can dramatically improve AI outputs beyond just good prompting.

And don’t worry, we’ll keep it tech-jargon-free.

🔍 Why You’re Hearing About This Now

Prompt engineering has been a game-changer.

Suddenly, everyone can leverage AI to answer questions, draft content, or brainstorm ideas… just by knowing how to ask the right questions.

But prompting alone can only take you so far.

That’s where Fine-tuning and RAG step in.

Think of your AI assistant as an intern. Good instructions (prompts) help them perform tasks reasonably well. But what if the intern lacks specific expertise or up-to-date knowledge?

Fine-tuning means taking a general-purpose AI model (like the ones behind ChatGPT) and training it further on specialized data so it deeply understands a specific topic or task.

Think of fine-tuning as hiring an intern who already has great general skills, but then giving them targeted, intense training on exactly what your team or company needs. They become experts tailored specifically to your context.

On the other hand, Retrieval-Augmented Generation (RAG) lets your AI consult external data sources at the moment a question is asked, so it can pull relevant, current information into its answer.

Think of RAG as giving your intern instant access to a comprehensive library… so they never need to guess or memorize. They can simply look things up immediately whenever needed.

Both of these go beyond prompting, turning your AI into something significantly smarter and more reliable.

🧩 The Three Layers of a Great LLM Output

Think of generating good AI responses like preparing a delicious meal. It depends on three things:

Ingredients (Data):

Fresh, accurate ingredients make better dishes. Old or stale ingredients (outdated data) weaken your results.

Recipe (Training):

Even great ingredients make a bad meal if you don't cook them correctly. Proper training helps your AI learn how to combine data to produce meaningful results.

Presentation (Prompting):

No matter how delicious the dish, poor presentation (unclear prompts) makes the experience less satisfying.

Each of these layers matters… a lot.

🔧 How to Improve Each Layer

🌱 Data Layer: Keep Ingredients Fresh with RAG

Most AI models have a knowledge "cutoff" date, usually months or even years in the past.

Without updated data, the model assumes outdated facts are still true.

RAG addresses this:

It lets your AI look up information in external knowledge bases and databases at the moment a question is asked.

Scenario example:

Without RAG, your AI thinks it’s still 2022. With RAG, it can check current sources directly and give up-to-date answers.

Real-world RAG example:

AI-powered chatbots that reference your latest company documentation, support articles, or sales numbers in real time, giving users precise, accurate information.

🎓 Training Layer: Sharpening the Recipe through Fine-tuning

General-purpose models might know a little bit about a lot of things, but often they lack the deep understanding necessary for specialized tasks.

Fine-tuning solves this:

It involves additional training on your specific data (like product information, customer scenarios, or even medical records) so the AI becomes an expert in your particular context.

Scenario example:

Without fine-tuning, your AI gives generic customer support responses. With fine-tuning, it understands precisely how your product works, common issues your customers face, and provides accurate, detailed solutions tailored specifically to them.

Real-world fine-tuning example:

A customer support AI trained deeply on company FAQs, chat transcripts, and support tickets, capable of giving consistent and contextually accurate answers without guesswork.

🎨 Prompting Layer: Plating Matters with Prompt Engineering

Great ingredients and a carefully executed recipe can still underwhelm if poorly presented. Similarly, even an expertly fine-tuned and updated model needs clear, precise instructions to perform its best.

You’ve already mastered the art of Prompt Engineering, right?

If not, you can read my earlier posts here to learn how to write better prompts and get the most out of an LLM.

But here’s a brief recap:

Clear, structured, and context-specific prompts significantly enhance the usability, clarity, and effectiveness of AI-generated outputs.

Scenario example:

Just like beautifully plating a dish, well-crafted prompts ensure your AI responses are clear, precise, and user-friendly.

🚦 Why Each Layer Needs a Closer Look

Let’s look carefully at why improving each layer directly upgrades your AI outputs:

Data Layer (RAG):

Fresh data equals accurate answers and real-time reliability. Without updating your data, even perfectly prompted responses quickly become outdated or irrelevant.

Training Layer (Fine-tuning):

Specialized training can mean better accuracy on your tasks, deeper understanding of your domain, fewer hallucinations (confident but incorrect answers), and more consistent outputs. Without fine-tuning, the model tends to give generic answers and can easily misunderstand your specific use case.

Prompting Layer (Prompt Engineering):

Well-crafted prompts deliver clarity, precision, and better user experiences. Even a fine-tuned, up-to-date model can give poor results without thoughtfully structured prompts.

Each improvement compounds the others:

Better data feeds smarter training; smarter training empowers clearer prompting. All three combined lead to reliably excellent results.

🌟 Quick-start Tips

Ready to level up your AI? Here’s exactly how you can start improving each layer today:

Prompt Engineering:

Always give clear context and specific instructions. For example:

“You’re drafting an email to an HR manager. Keep it professional, empathetic, and concise.”
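If you talk to a model from code instead of a chat window, the same advice holds: keep the context, the task, and the constraints clearly separated. Here’s a minimal Python sketch of that idea. The helper name `build_prompt` and the example task are hypothetical, made up for illustration; the code only assembles the prompt text and doesn’t call any AI service.

```python
def build_prompt(role_context: str, task: str, constraints: list[str]) -> str:
    """Assemble a prompt from clearly separated parts (hypothetical helper)."""
    lines = [
        f"Context: {role_context}",
        f"Task: {task}",
        "Constraints:",
    ]
    # One bullet per constraint keeps the instructions easy for the model to follow.
    lines += [f"- {c}" for c in constraints]
    return "\n".join(lines)


# Hypothetical example based on the HR email prompt above.
prompt = build_prompt(
    role_context="You're drafting an email to an HR manager.",
    task="Request a meeting to discuss flexible working hours.",
    constraints=["Keep it professional", "Keep it empathetic", "Keep it concise"],
)
print(prompt)
```

Keeping each part on its own labeled line makes it easy to swap in a different audience or tone without rewriting the whole prompt.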

RAG (Retrieval-Augmented Generation):

Start by integrating your AI with real-time data sources or regularly updated knowledge bases, such as:

Internal documentation platforms (e.g., Notion, Confluence, Airtable).

Company websites and blogs containing product updates and announcements.

Specialized databases, industry reports, or news feeds relevant to your domain.

Then, set up your system so it queries these sources whenever a question comes in: it searches the external documents or databases, retrieves the most relevant and current passages, and passes them to the model to use in its response. This could be as simple as uploading a PDF into ChatGPT and asking it to refer to the document before answering.
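To make the retrieve-then-answer loop concrete, here’s a minimal Python sketch. It assumes a tiny in-memory list of documents and uses simple word-overlap scoring as a stand-in for the embedding-based search that production RAG systems usually rely on. The document texts and function names are hypothetical, and the final step (sending the prompt to a model) is left out because it depends on which provider you use.

```python
import re

# Hypothetical knowledge base: in practice these would come from Notion,
# Confluence, your website, industry reports, etc.
DOCUMENTS = [
    "Pricing update (2024): the Pro plan now costs $25 per user per month.",
    "Refund policy: customers can request a full refund within 30 days.",
    "Release notes: version 3.2 adds single sign-on for Enterprise accounts.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question.
    Word overlap is a simple stand-in for embedding-based similarity search."""
    q_words = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, docs: list[str]) -> str:
    """Put the retrieved passages in front of the question so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the context below. If the answer isn't there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


print(build_rag_prompt("How much does the Pro plan cost per month?", DOCUMENTS))
```

A real setup would swap the word-overlap scoring for a proper search index, but the shape stays the same: find the relevant passages first, then hand them to the model along with the question.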

Think of it as giving your AI a built-in research assistant, so its replies draw on the most current and accurate facts in your sources.

Fine-tuning:

Begin by gathering structured, domain-specific data that reflects exactly the type of knowledge your model needs. These examples also help the model pick up your style and tone. Good sources include:

Company FAQs and detailed product documentation.

Past customer support conversations and tickets.

Sales records, call transcripts, or CRM notes.

Once collected, format this data into structured prompt-and-response pairs. Then use these carefully curated examples to further train your existing model, allowing it to recognize the nuances, terminology, and scenarios specific to your company or industry.
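Here’s a minimal Python sketch of the "format into prompts and responses" step. It converts question-and-answer pairs into JSON Lines, one training example per line, using the chat-style `messages` structure (system, user, and assistant roles) described in OpenAI’s fine-tuning guide. The example pairs and system message are hypothetical, and other providers may expect a different layout, so check your provider’s documentation before uploading anything.

```python
import json

# Hypothetical examples pulled from FAQs and past support tickets.
SUPPORT_EXAMPLES = [
    ("How do I reset my password?",
     "Go to Settings > Security, click 'Reset password', and follow the link we email you."),
    ("Can I export my data to CSV?",
     "Yes. Open Reports, choose the date range, and click 'Export CSV'."),
]

# Hypothetical system message describing the assistant's role and tone.
SYSTEM_MESSAGE = "You are the support assistant for our product. Be accurate and concise."


def to_chat_example(question: str, answer: str) -> dict:
    """Turn one question/answer pair into a chat-style training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_MESSAGE},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize the examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_example(q, a)) for q, a in pairs)


jsonl = to_jsonl(SUPPORT_EXAMPLES)
print(jsonl)
```

A real dataset would need far more examples than these two, and you’d save the output to a `.jsonl` file to upload to your fine-tuning provider.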

Think of this process as giving your AI an intensive onboarding, so it becomes deeply familiar with your business and delivers accurate, relevant, and tailored answers more consistently.

If this feels overwhelming, don’t worry! In upcoming posts, I’ll dive deeper into both RAG and fine-tuning so you can understand them in detail and apply them in your day-to-day work as well!

💡 TL;DR Recap

Here’s your takeaway:

Prompting makes your AI communicate clearly.

Fine-tuning empowers your AI with your specific expertise.

RAG keeps your AI responses accurate and relevant.

Think of AI outputs as cooking a delicious meal:

Great AI Results = Fresh Ingredients (RAG) + Specialized Recipes (Fine-tuning) + Beautiful Plating (Prompt Engineering)

🛣️ Final Thought

The real magic happens when you don't rely solely on prompt hacks.

Instead, level up at every layer: data freshness, deep specialization, and clear communication.

That’s how you truly unlock the power of Large Language Models.

And when you do, your AI becomes more than just a tool; it becomes an invaluable partner you can consistently rely on.

Next on LLMentary:

We'll dig deeper into fine-tuning and RAG to understand what they mean in detail and when you should use each technique… so that your AI can always stay ahead of the curve.

Until then, stay curious.

SPECIAL BENEFITS TO THOSE WHO REFER PEOPLE TO THIS BLOG!

When your friends subscribe using your referral link, you’ll earn the following benefits:

Get the Meta-Prompting Info Pack for 3 referrals - This pack holds everything you need to set yourself up to write the best prompts and get the best-quality outputs from your LLM. I’ve also curated an all-purpose ‘Meta Prompt’ that you can use with ChatGPT to improve your prompting.

Get a 30-minute Zoom chat with me for 10 referrals - This is your chance to ask anything… whether it’s help with prompt engineering, AI strategy for your business, career advice in the AI space, or just brainstorming how you can make AI work better for you.

Get a Bespoke Prompting booklet for 20 referrals - Tell me what you want to achieve with AI, your industry or project, and any specific challenges you face. From our conversation, I’ll craft a custom prompt booklet designed just for you, packed with actionable templates and strategies you can apply immediately.

Share this with anyone you think will benefit from what you’re reading here. The mission of LLMentary is to help individuals reach their full potential. So help us achieve that mission! :)